The History of the Personal Computer

Sheila Heti on the birth, rise, and decline of the personal computer.

The personal computer came into existence because, at a time when the idea seemed far-fetched, certain individuals wanted so passionately to have a computer of their own that they just made it happen. “Computer power to the people!” was their rallying cry.

What was it like, the whole development phase?

Alan: “We were clutching at straws! You’ll do anything when you don’t know what to do.”

Alan Kay was one of the earliest prophets of the personal computer. He believed the computer’s destiny was “to disappear into our lives like all the really important technologies,” things we don’t even think of anymore as technologies, like watches, and paper and pencil, and clothing and books, and all of these things. He told people, “I think the computer’s destiny is to be like one of those.”

This, then, is the story of the personal computer: its birth, its rise to power and influence, and its recent decline. The personal computer still exists, of course, but in an important sense its era—the era of the tinkerer, the explorer, the pirate—is over.

In the 1970s and 1980s, the personal computer started appearing in people’s homes, much like a band of mice invading a small town. At first it was strange to see a mouse inside; then, by the 1990s, it was strange to see a house without one. Computers were used for playing games, for word processing, for basic graphic design—such as making posters you could put up around your neighborhood, announcing a yard sale, or for designing a birthday card—and of course for computation. Before that, only people in the field of computer science owned computers, and before that, computers were so large they could only be stored in an even larger university, where the people who studied computer engineering had to take turns with the machine, feeding punch cards of their programs into the computer in shifts, waiting sometimes twenty-four hours for a simple output, usually telling them there was an error in their code.

The earliest computer programs were designed by mathematicians and scientists who thought the work of communicating with the machine would be straightforward and logical. They found themselves aggravated to discover that writing usable software was much harder than they had hoped. Computers were stubborn: they insisted on doing what the person said, rather than what the person meant. As a result, a new class of artisans took over from the mathematicians: these were the computer scientists—the programmers—who figured out how to talk to the computers in a language the machines could understand, and who had a facility with it, and who found it fun.

“Software is actually an art form,” Alan Kay told a reporter from Scientific American. “It’s a meticulously crafted literature that enables complex conversations between humans and machines. Software is really a kind of literature, written for both computers and people to read.”

In a sense, computer scientists were the writers, the artists, of these new worlds the computers would simulate. Later, the wizard Jaron Lanier would define the three most important new art forms of the twentieth century as “film, jazz, and programming.”

Naturally, anything that is larger than human scale will soon evoke mechanisms akin to religion, and so rather quickly there was a priesthood of programmers. Some ordinary citizens thought computers would take over the world: some citizens wanted them to take over the world, and some citizens feared they would take over the world.

That is not what the computer industry wanted people to be thinking about: you can’t sell a terrifying demon to the common consumer. It’s even hard to sell a God. And so knowledgeable people in different places began saying, “No, this computer thing has the potential of being a partner of humanity, a complementary partner. It can do some things that we can’t, and vice versa.” But how could they demonstrate this? They said to one another, “We should think of some ways of bringing the computer down to human scale, otherwise people will continue to be too scared of it.”

That is where Alan Kay and many others came in. Alan is perhaps a lesser figure in a story that involves more famous names, but because he is everywhere less mythologized, he is easier to see. Alan and his friends came up with the Dynabook. He admitted to Scientific American that the Dynabook was “sort of a figment of the imagination, a Holy Grail that got us going.” It was never a computer, but rather a cardboard model that, as he jokingly put it, “allowed us to avoid having meetings about what we were trying to do.”

Before discussing the model, it is worth saying something about what a computer actually is. The easiest way to think about a computer is as mostly memory, plus a very small amount of logic that carries out a few basic instructions.

Many people who haven’t studied mathematics probably haven’t thought about the notion of an algorithm, but it’s simply a process for producing and moving symbols around. So a computer has a few basic properties: memory, the ability to put symbols into its memory, the ability to take them out again, and the ability to change them. This conceptual framework is enough to simulate any computer that has ever existed, or will ever exist.

Of course, abstract means of storing, retrieving, and manipulating information (symbols) have been in existence since the beginning of humankind: speech is one such method, art and writing another. Even when we are not using tools to manipulate symbols, we’re dealing with the world through intermediaries. After all, we can’t fit the whole world into our brains. Therefore, we are forced to make abstractions. Because the world exists as a model in our minds, we are actually living in a kind of waking hallucination. The reality we exist in is already virtual!

That takes care of the word “computer,” but what about the word “personal”? Personal means something that is owned by its user, costs no more than a TV, and is portable: the user can easily carry the device around while carrying other things at the same time. At this point, Alan Kay asked the reporter from Scientific American, “Need we add that it be usable in the woods?”

There were three renderings of the personal computer—or Dynabook—that Alan and his friends considered: one resembled a spiral-bound notebook, another was something that went in your pocket and had a head-mounted display and glasses, and finally there was a sensitive wristwatch that, twenty years or so in the future, would ideally be able to connect to a network—in any room that had an outlet in the wall—and would contain an interface that would also move from room to room, as you went. At issue was less which model the Dynabook would resemble, than what these models meant: a computer you could have an intimate, casual, and mundane relationship with. Alan spoke about how “a human should be able to aspire to the heights of humanity” on such a device. “But just as a person with paper and a pen might aspire to the heights of Shakespeare, that doesn’t mean you have to be Shakespeare every time you write something on a piece of paper.”

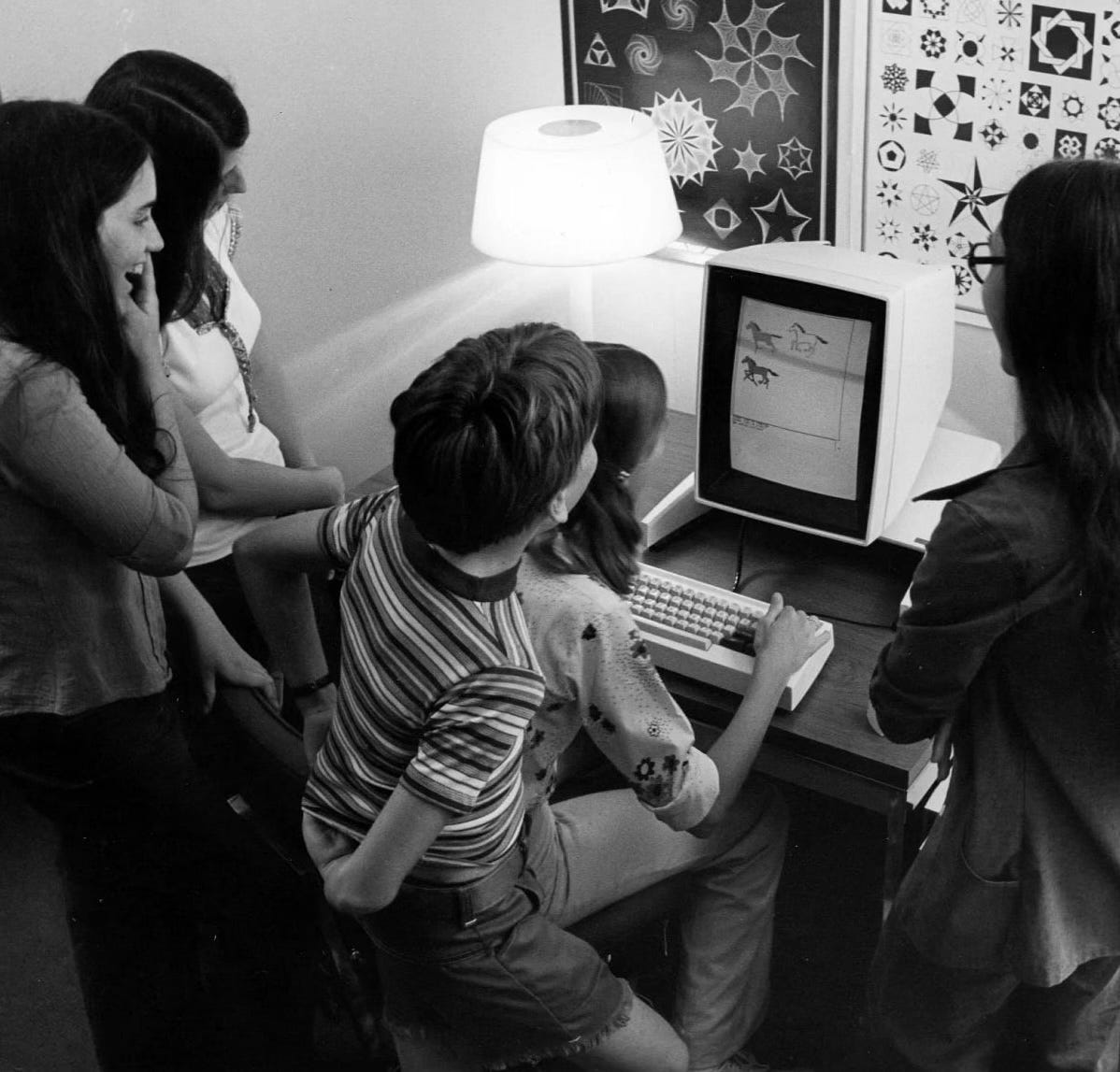

All the other tinkerers were asking themselves the same question: how to make the computer something intimate, friendly, and accessible? Also, what might people want to do with a computer if they could carry it around? The problem, as Alan put it, was that programmers at the time “lived in this tiny world, full of phrases we learned when we were taking math classes, and it was hermetic, and it was full of people who liked to learn how to do complicated things, and who delighted in it.” One of the hardest things for them to accept was that the common, commercial user was not like that at all. “It became the number one dictum of user interface design: the user is not like us.” They needed a way of shocking themselves into fully accepting this realization, and the way to do this, they decided, was to present the prototype to children.

And so they did: two hundred and fifty of them, ages six to fifteen. Alan and the other programmers watched how the children approached the computer, hoping this would expand their ideas of what the computer could be.

Recalling this phase of testing, and the next, Alan said, “We discovered that whether it was children or adults who first encountered a personal computer, most of them were already involved in pursuits of their own choosing, so the initial impulse was always to exploit the system to assist them in things they were already doing. An office manager might automate paperwork and accounts. A child might work on ways to create pictures and games. People naturally started building personal tools. Although man has been characterized as the toolmaking species, toolmaking has historically been in the hands of specialists. But we began to see that with the computer, toolmaking could become democratic, something anyone could do. Composers might choose to make the computer into a tool that allowed them to hear their compositions as they composed. Businessmen might turn the computer into a briefcase containing a working simulation of their company. Educators might use a computer to engage students in a Socratic dialogue, complete with graphic animations. Homemakers might use it to store and manipulate accounts, budgets, receipts and reminders. Children could turn it into an active learning tool with access to larger stores of knowledge than could be available in any home library.” This was 1967.

“I said, ‘Okay, so twenty years from now we’ll have a notebook-sized computer that will not only do all sorts of wonderful things, but mundane things too.’ And so one of the questions we asked ourselves was, ‘What kind of computer would it have to be for you to want to do some grand things on it, but also mundane things, like writing a grocery list—and for the computer to be something you’d be willing to carry into a supermarket, and carry out with a couple of bags of groceries?’ Of course, you could write your shopping list on paper. The question was not whether you could replace paper or not. The question was whether you could cover the old medium with the new, and then find all these marvelous extra things to do with the new medium that you couldn’t do with the old.”

Alan liked the analogy of a book, “because with the book you have several stages: you have the invention of writing, which is a huge idea, and the difference between having it and not having it is enormous. And the difference between having computers—even if they’re big mainframes—and not having them, is also enormous. The next stage was the Gutenberg stage, and as McLuhan liked to say, ‘when a new medium comes along, it imitates the old.’ So Gutenberg’s books were the same size as those beautiful illuminated manuscripts that were written by hand by monks. They were big! At the same time, there were so few books in a given library that each actually had its own reading table. You know, you go into the library, you go over to the table that the particular book is at, and they are chained to the tables because they are so valuable—they were priceless! Gutenberg could of course produce numerous books, but he didn’t know what size they should be, and it wasn’t until some decades later that Aldus Manutius, a Venetian publisher, decided that books should be this size—the approximate size they are today. He decided on that size because that was the size of the saddle bags in Venice in the late 1400s. So the key idea Aldus had was that books could now be lost. It was okay. They were cheap! And because books could now be lost, they could also be taken with you. I think, in a very important respect, where we are today with the computer is where the book was before Aldus. Because the notion that a computer can be lost is not one we like yet. You know, we protect our computers. We bolt them to the desk and so forth.” This was the 1970s. “They are quite expensive. They’ll really be valuable once we can lose them. Once we are willing to lose them, then they’ll be everywhere.”

Before Alan got into computers, he was interested in theatre. And theatre influenced his thinking quite a lot. “I mean, theatre is actually kind of an absurd setup,” he said, “because you have a balding person in his forties impersonating a teenager in triumph, holding a plastic skull in front of some wooden scenery, right? It can’t possibly work! But it does—all the time! And the reason it does is because the theatrical experience is a mirror that blasts the audience’s intelligence back at them. It’s like somebody once said, ‘People don’t come to the theatre to forget, they come tingling to remember.’ What theatre is is an evoker. So what we do in the theatre is take something impossibly complex, like human existence or something, and present a person as something more like archetype, which the user’s mind can resynthesize human existence out of.”

“I think it was Picasso who said that art is not the truth, but rather a lie that tells the truth. This is true of the art of theatre,” he continued, “and I realized that in order for the computer to spread through society, this had to become true of the computer, too. Computers couldn’t remain ‘the truth that tells the truth,’ an ugly, heavy box with a black screen and digital, green or amber letters—apparently and in fact a mechanical, code-reading machine which only special people can communicate with in a difficult, formal language. So I began to think, what if the interface between the user and the computer was less literal, and more of a theatrical lie?”

“To speak to each other about this idea, my friends and I invented the term user illusion. I don’t think we ever used the term user interface, at least not in the early days. But the idea was: What can you do theatrically—before the user’s eyes—that will create the precise amount of magic you want the person to experience? Because the question in the theatre is: how do you dress up the stage to set expectations that will allow the story to feel true to the audience? Similarly, giving the user appropriate cues has to be central to the interface design. It’s all about directing the user into particular ways of perceiving.”

“My friend Jerome often spoke about how we have different ways of knowing the world: one of them is kinesthetic, one of them is visual, one of them is symbolic. That’s why,” Alan said, “we devised the concept of the mouse. We weren’t just thinking of ways of indicating things on a screen. The broader point was, how do we give the user kinesthetic entry into this world—the world of the computer? We have a kinesthetic way of knowing the world through touch. We have a visual way of knowing the world. These ways seemed to be intuitive for people, we realized. The visual deals with analogy, the touch thing makes you feel more at home: you’re not isolated from things when you’re in physical contact with them. You’re grounded. The mouse essentially gave people the tiniest way of putting their hand in through the screen to touch the objects they were working with. Meanwhile, the screen became a theatrical representation that was hiding a truth that is much more complex, something users don’t want to think about: that a computer is a machine executing two million instructions per second.”

Alan and his friends now asked themselves, “What should the theatre of the screen be presenting?” At the time, it could have been anything. People now take for granted that there are “icons” and “windows”—that the computer makes an analogy to a physical desk you might be sitting at, in a room—but really it could have been anything at all. As Alan pointed out, “We read books, and we watch TV, and we talk on the telephone, with very little awareness of how these processes work, or how they might work differently. Once the applications of a technology become ‘natural,’ the battle to shape its uses and meanings is already, to a large degree, finished, and what were historically contingent processes are now seen as inherent to the medium. Take, for example, television. We all think we know what ‘television’ is. But that’s not what TV had to be. What could the computer be? That was the question we were asking. People felt alienated from computers. They knew they were alienated. The very clunkiness of computers in the 1970s created such a level of self-consciousness about the computing process that there was no way to pretend it all was happening ‘naturally.’ But this was to our advantage, as inventors.”

“From watching adults and children, we knew that people wanted to work on ‘projects.’ I especially liked to work on multiple projects at once, and people used to accuse me of abandoning my desk. You know, my work got piled up on one desk, so I’d go and use another desk. When I’m working on multiple things, I like to have one table full of all the crap that goes there, and another table full of more crap, and so forth, and to be able to turn to those various desks and access everything in the state in which I left it. This was the interface we wound up trying to model for the user: what if you could experience yourself as moving from one project to another—and could think of the screen as multiple desks holding all the tools needed for a particular project?”

“The problem we quickly encountered was that in many instances, the display screen was too small to hold all the information a user might wish to consult at once. So we developed the idea of ‘windows,’ or simulated display frames within the larger physical display. Windows allowed various documents composed of text, pictures, musical notation, dynamic animations, and so on, to be created and viewed at various levels of refinement. Once the windows were created, they could overlap on the screen like sheets of paper! When the mouse was clicked on a partially covered window, that window was redisplayed to overlap the other windows. The windows containing useful but not immediately needed information could be collapsed to small rectangles that were labeled with a name showing the information they contained. Then a ‘touch’ of the mouse would cause them to instantly open up and display their contents.”

The “window” is what Alan is best known for, and perhaps it is no surprise that when Microsoft came out with one of its earliest operating systems, it stole his idea and called it Windows. Alan didn’t care. The fun part was coming up with the idea, and someone who likes coming up with ideas is always already thinking about the next one.

“With this ‘window’ and mouse system,” Alan said, “users felt like they were sinking their fingers right through the glass of the display and touching the information structures directly inside. They loved it! Windows, menus, spreadsheets, and so on—these all provided a context, a stage, that allowed the user’s intelligence to keep on choosing the appropriate next step. In other words, to follow a story. And the story was their project.”

So that was software. But what of hardware? An engineer who worked at Apple in the 1990s explained some of the thinking behind the development of the iMac. The iMac was the first personal computer to appeal to consumers in a completely new way, one that computer scientists until then had only dreamed of: the iMac finally made the computer un-scary.

This Apple engineer said, “We tried to make it simple and elegant. Instead of requiring users to connect a computer tower to a monitor, the iMac would come in an all-in-one shape, letting you simply plug it into the wall and get started. It was all about removing hurdles that might stop a newcomer in their tracks. That was all we talked about. Someone suggested it should come in a variety of bright, fun colors—which was a million miles away from the staid, stale designs of our competitors. Most computers at the time were grey or beige.” They even gave the colors sweetly childlike names: tangerine, lime, purple popsicle, penny whistle red, and blueberry blue. “We also made the outer shell translucent, so users could see the insides of the machine, removing their fearsome mystery.”

But perhaps the most important, appealing, and innovative detail, the one which truly brought the computer into the lives of the people and made it personal, was this: to the computer’s top, they added a handle.

At a conference in the late 1990s, Alan gave a speech in which he said, “A popular misconception about computers is that they are logical. Forthright would be a better term. On it, almost any symbolic representation can be carried out. Moreover, the computers’ use of symbols, like the use of symbols in language and math, can be sufficiently disconnected from the real world to enable computers to create all sorts of splendid nonsense! Although the hardware of a computer is subject to natural laws, the range of simulations a computer can perform with its software is bound only by the limits of the human imagination. For instance, a computer can simulate a spacecraft which travels faster than the speed of light. It can simulate pretty much anything.” Though everyone knew this, it was always exciting to hear it again.

“It may seem almost sinful to discuss the simulation of nonsense, but only if we want to believe that all of what we already know is correct and complete—and history has not been kind to people who subscribe to that point of view! In fact, this potential for manipulating apparent nonsense must be valued in order to assure the development of minds of the future.” This was Alan’s most closely held and heartfelt vision: that although programs that run on personal computers can be guided in any direction we choose, “the real sin would be to make the computer act like a machine.”

A decade and a half later, a chastened Alan spoke uneasily, looking around from an unexpected new present. Standing before a tech delegation, he noted, “As with language, computer users are feeling a strong motivation to emphasize the similarity between simulation and experience; to conflate these things, and to ignore the very great distance between symbols (or models), and the real world. A feeling of power and a narcissistic fascination with the image of oneself reflected back from the computer is increasingly common.” People were “employing the computer trivially—simulating what paper, paint, and file cabinets can do; using it as a crutch, like having the computer remember things we can perfectly well remember ourselves; or using it as an excuse—blaming the computer for human failings.”

“But perhaps what is most concerning to me is the increasing human propensity to place faith in—or assign a higher power to—this hardware-based, code-reading machine. We have made the user illusion too great. We have made it so great that the truth of what the computer actually is is no longer understood.”

Alan Kay had predicted wrongly what our collective sin would be: not that we would be so unimaginative as to forever treat the computer as a distant machine, but that the user illusion would grow so strong that it would come to seem like “apparent nonsense” that the computer essentially was a machine.

Theatre is everywhere present in the human world. Our minds play our lives for us as if in tiny theatres. And our computers are tiny theatres in whose thrall we are almost continuously. The engineers had worried about none of this. They had not anticipated that the physical theatres would grow ever more empty, as the spirit of theatre would enter and pulse within every personal computer.

*Read part one of this series, The Spirit in the Blue Light.*
